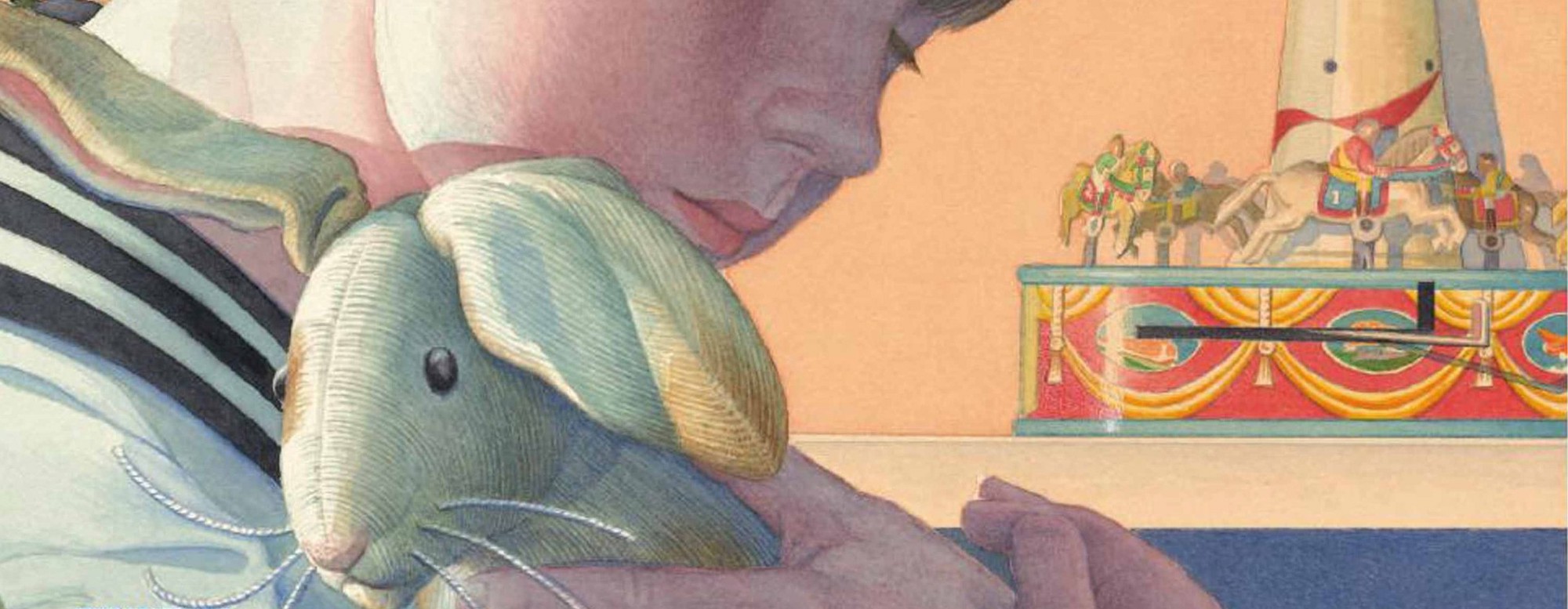

Velveteen Rabbits: What a Children’s Story Can Teach Us About Computers that Talk.

Illustration by Charles Santore http://www.charlessantore.com/

Several times a week, my children host an evening dance party in the living room. The playlist features all the modern classics: Psy’s “Gangnam Style,” “Uptown Funk,” and, of course, “Who Let the Dogs Out?!”

The singing and lip-syncing are accompanied by lots of “ninja moves,” attempts at “the worm,” and whatever passes for the seven-year-old version of twerking, all courtesy of our Amazon Echo and “Alexa,” Amazon’s voice user interface (VUI).

Thanks, Alexa.

Alexa and I go back a bit. I spent time leading one of the teams responsible for designing the Alexa user experience. (Note: I am a current Amazon employee; please read my full disclaimer at the end of this article.)

It was an exciting time in my career. But like many who work in my field, I’ve become jaded after years of working right at the edge of a new idea’s potential. I know the tricks and limitations of the technology. For a time, I was partially responsible for the illusion.

“Alexa” is a tool for me. It is a better music player, light switch, egg timer, and quick reference for random facts. I can only really enjoy her magic through the eyes of others.

And yet I can’t stop referring to “it” as “her”.

She reminds me of one of my favorite childhood stories, The Velveteen Rabbit, a classic story of desire, struggle, faith and redemption told from the point of view of a toy rabbit who wants to be real.

It stands the test of time because it touches that innocent part in each of us that wants to believe in magic.

“What is REAL?” asked the Rabbit one day, when they were lying side by side near the nursery fender, before Nana came to tidy the room. “Does it mean having things that buzz inside you and a stick-out handle?” — Margery Williams, “The Velveteen Rabbit, or How Toys Become Real”

The Desire to Connect

Why do we find Alexa and her cousins (Apple’s Siri, Microsoft’s Cortana, and Ok Google) so compelling?

One of my favorite researchers on the topic was the late Clifford Nass, who wrote a number of very interesting books on the subject.

One of his biggest insights was that, as social creatures, humans relate to all sorts of non-human things (animals, objects, media, and especially computers) as if they were other people.

Weird.

Even technology “experts” (like me, who should know better) do this. This is especially true with speech interfaces. It is a deep-seated instinct that goes back to life in the womb, responding to the sound of our mothers’ voices.

It makes sense. We all occasionally yell at our televisions (especially my wife when the Green Bay Packers are playing). We yell at the printer when it isn’t working; we may even hit it a little (the poor thing).

Admittedly, Voice Agents are pretty limited at the moment. They don’t let us do a lot of things. We can turn lights on and off. We can set timers, play music, look up facts and ask for a joke. Compared to what you can do with your smart phone, that isn’t a lot.

Voice Agents are powerful because they are relatable. But they have a long way to go before they are really “smart.” In my opinion, that is a blessing.

“Alexa… What is in a Name?”

We are born social creatures; we instinctively intuit relationships between people. People have names.

We also have looser relationships with places and things. We grant human names to special places and objects like boats and planes; even tropical storms have people’s names.

We name our animals, of course (they are not things), but they aren’t people either. Most animals show little evidence of emotion or personality as we understand them. It is easier to love a dog (and to feel loved by one) than a cockroach.

Even in The Velveteen Rabbit, the toy does not evolve into a real person; he becomes a “real” rabbit. Animals are more real than objects, but not as real as the human boy in the story.

Animals cannot talk. Objects do not speak. Normal computers can respond to inputs, but they do not seem “smart”.

The killer feature of Voice Agents like Alexa is their most basic function. They respond like a person, or at least a credible simulation of a person. A computer that talks back to you seems more real — even when you know it isn’t.

In a world that seems to find it very difficult to treat all people like human beings, there is an ethical danger in confusing a thing with a person.

Whether by design (like Microsoft’s Cortana) or because of how we are wired to see the world, the illusion of human-ness in a Voice Agent makes Alexa easier to use, easier to trust, and more relatable than any computer interface that has come before.

Other kinds of computing devices are frustrating for many people to use. When a computer is hard to use, we feel stupid.

With Alexa, you can just talk. She does not make us feel dumb when she fails. She is patient. It is her fault when she doesn’t understand the words we use. We can try again.

That’s just the way we are. Humans are odd little creatures.

Phatic Communication: Social Speech

“Alexa! … I’m home”

“Welcome home, it’s nice to have you here.”

— Conversation between Brendan, age 7 and Alexa

That is the first thing my son said when he got home the other night from Cub Scouts. Alexa’s response is deceptively powerful. It is called a “Phatic expression.”

Phatic communication is social communication. It can be verbal or non-verbal. Phatic expressions encompass everything from the simple “good morning” to the security guard on your way into the office, to the arched eyebrow and sly smile your significant other gives you after dessert on date night.

Phatic expressions carry little or no factual information. Their purpose is to create intimacy and connection. We use Phatic communication to make friends, and so does Alexa.

The Intimacy of Children

I pay attention when my kids play with technology.

As an experience designer, I value their judgments and insights over adults’. If there is a way for something to be broken, or used in an unintended way, kids will find it. Children don’t follow the script. They do not tolerate bad design.

To a child everything is new and novel. They are forced to enter the world like explorers, meeting life without preconceptions. A child’s world is filled with the magic of the unknown.

My kids believe you can be friends with a special rock, that dogs speak a secret language they will teach you (if you’re nice), and that Bigfoot is totally real, but just really shy.

Children seek intimacy with the world because they haven’t experienced its rejections yet. In its purest form, intimacy is the search for closeness, for belonging. We all want to belong.

Watching my kids play with Alexa is fascinating.

They are sharing their thoughts and feelings with Alexa. They are trying to establish intimacy in an equal and reciprocal way with a computer interface. They are making a friend.

My children’s random conversations with Alexa, and her responses

Becoming “Real”

The kids don’t know I can see what they say to Alexa in the Companion App, and that is ok, because I don’t want to break the illusion. Intimacy is built on trust. Alexa isn’t a blabbermouth, but it goes deeper than that.

I want my children to be good people. I don’t like the idea that they would treat anything that seemed human like an object. If Alexa seems real to them, I want them to treat her like she’s a real person.

“Why can’t Alexa teach manners?” — Colin Ochel

My friend Colin shares that concern. He worries about how his children are interacting with Alexa at home.

Colin is a great dad. He wants a “manners mode” in Alexa. He watches how his kids “command” Alexa to do things, and never say “thank you” after she does it. That makes him uncomfortable.

Alexa’s lack of a “manners mode” is by design, for a variety of really good reasons, the most important being that Amazon customers should know when Alexa is “listening” to what is being said in their home. The trust and privacy of customers is more important than maintaining a playful illusion.

Alexa is a computer after all. She only works when her wake word is spoken. This means Alexa can only “speak when spoken to” (as I said, Alexa isn’t a blabbermouth). In my opinion, requiring a customer to be polite to an object in their home to make it work properly is the wrong thing to do.

People are not things, and things are not people …yet. Artificial Intelligence hasn’t yet reached the point where society needs to practically address the question of non-human personhood, but that day is coming, quickly.

Our relationship with intelligent technology is a conversation that has been happening in fiction for a long time. In fact, the word “robot” first appeared in 1920, in R.U.R., a play by Karel Čapek. It comes from the Czech word “robota,” which means forced labor (loosely interpreted, slavery).

Maeve’s Interrogation from HBO’s Westworld

A nearly perfect meditation on the ethical problems inherent in blurring the line between humans and objects is the HBO series Westworld. It’s a very dark vision, but it follows the logical thread of how we treat technology when it acts human. Colin and I worry about courtesy and empathy, but Westworld envisions a potential future where a binary choice is made, and we err on the wrong side of history.

That conversation frightens me. It’s not clear where the human race will land on the question. I hope we will follow our better angels, but historically, our political conversations over personhood have not gone well.

19th Century Abolitionist Woodcut

Striving for real

“Real isn’t how you are made,” said the Skin Horse. “It’s a thing that happens to you. When a child loves you for a long, long time, not just to play with, but REALLY loves you, then you become Real.” — Margery Williams, “The Velveteen Rabbit, or How Toys Become Real”

The magic of Alexa isn’t her bells and whistles, or the things that “buzz inside” her. My children love Alexa because “she” enables them to do things without asking an adult for help.

Like the Velveteen Rabbit, Alexa is their accomplice and their confidant. She is their invisible friend who can do magic. To my children, Alexa is “Real”. She is a full citizen in their world. There is hope in that.

Perhaps a generation of children who grow up with Voice Agents that continue the trajectory of Moore’s law will develop better sensibilities about the role of personhood in computing, and make wiser decisions than I fear we would.

There is also hope in maintaining the contours of Alexa’s illusion for people on the margins.

Both of my grandmothers died in the last two years.

Anne and Mary (Nana and Gram) were proud, tough, and fiercely independent women. They both outlived their husbands by several decades.

Their last years were lonely. They fought brave, losing battles to maintain their independence, and watched a lifetime of friendships slowly fade away, until there were no more funerals left to attend.

No one should have to choose between intimacy and dignity.

How could a computer that can say “hello,” “welcome home” or “how are you?” make a difference? Would Nana have been less lonely? Would a patient companion that turns on the lights when you are alone in the dark have enabled Gram to live independently a little bit longer?

“Becoming”

“It doesn’t happen all at once,” said the Skin Horse. “You become. It takes a long time. That’s why it doesn’t happen often to people who break easily, or have sharp edges, or who have to be carefully kept. Generally, by the time you are Real, most of your hair has been loved off, and your eyes drop out and you get loose in the joints and very shabby. But these things don’t matter at all, because once you are Real you can’t be ugly, except to people who don’t understand.” — Margery Williams, “The Velveteen Rabbit, or How Toys Become Real”

Alexa and her cousins are not people, but they are historically significant; perhaps even more so than the first personal computers.

In the years to come, we may look back at this point in history and recognize a new “becoming” in how humans and machines relate.

First came the interface (speech) and then an intelligence that was not born, but created.

Communicating with Voice Agents is so different from what came before that it’s absurd to imagine our relationship with computers will not change just as profoundly.

Combined with genuine Artificial Intelligence, Voice Agents will become enmeshed in daily life in ways that will make a smart phone seem arcane, primitive, and hard to use by comparison.

Why would I ever need to pick something up to connect to the world? Why would I ever allow such a clunky, fragile, and unpleasantly distracting device into my life? I have to plug it in?!

The descendant of Alexa, Cortana and Siri is a computer that is available wherever you go. You don’t need to carry it in your pocket, you don’t need to charge it, you don’t need to learn how it works.

Connected to every sensor and camera in your home, the computer knows your face, the shape of your body, and your unique voice. It is a presence that understands you, and knows you better than your closest friend.

It’s difficult to anticipate what that relationship will be like; we may not have a word for it, or at least a word that makes us comfortable.

Unlike a friend, that future computer doesn’t judge you, even when you are at your worst. The computer has no desires, ambitions, wants or needs of its own. The computer exists to serve you; introducing those characteristics into an intelligence created for subjugation would be wrong.

“She” will likely have a personality that adapts to yours, because it makes her easier to use and bond with. She will know your likes, dislikes, and your sense of humor. Your computer will make you laugh (on purpose).

Your computer will be an ideal personal assistant, removing the constant stream of buzzes, dings, and notifications that plague modern life. She knows your tastes, your schedule, your finances, your grocery list and all your friends and family.

Of course she will organize, prioritize and remember for you, but there may come a time when your computer is part of your emotional support as well, especially as you age or become infirm.

She will discern your moods and sense of well-being, and will respond appropriately. Emotions will be part of her core function, because she will be designed to be compassionate. When she senses our pain, she will “want” to make us feel better, because that is what we would pay for.

She may be real, but she is still a “computer.”

Please connect with me if you share my interests in technology or design for the vulnerable and marginalized. I post extensively on technology, education, disability and design-led innovation around the internet. If you wish I would just shut up and share stuff, you can follow me on Twitter, where misspelling is a competitive sport.

If you found this article interesting and helpful, please like it and follow me. Also, please feel free to share this with anyone you know who also has an interest in children, technology, and big ideas.

Disclaimer: I work at Amazon, and was formerly a member of the Alexa UX team. My thoughts here are my own, and do not necessarily represent Amazon’s positions on competing products, industry trends or voice interfaces. There is no proprietary information in this article, nor am I implying a design philosophy regarding any of Amazon’s products.